There have been numerous advancements in ZFS over the years, and it’s time for me to revisit it, just in time for my VMware Lab revamp (more on this in a future post!).

My 3-year-old Synology DS412+ is just not cutting it anymore in keeping up with the workloads I’ve been throwing at it from the 3 x ESXi hosts.

Previous Setup

Synology DS412+

- 3 x 2 TB WD RED running RAID5

- 1 x 1GbE NIC serving NFS

- 1 x 1GbE NIC for general purpose Management / Media Streaming

Planned Setup

FreeNAS 9.3 (STABLE release):

- Intel i3 2120 (3M Cache, 3.30 GHz)

- 8GB Memory

- SASL2P RAID CARD

- 4 x 450GB SAS 15K RPM (Primary volume)

- 1 x 64GB SSD Crucial M4 (for SLOG)

- Future (still waiting for the SAS breakout cables): 2 x 2TB NL-SAS 7.2K RPM (for Backup / Tier 3 Workloads)

QUICK ZFS 101

SLOG stands for Separate ZFS Intent Log. Think of it as the transaction log of a traditional RDBMS. When no separate log device is specified, the ZIL (ZFS Intent Log) is written to the same pool, which adds extra IO and keeps the disk group from reaching its full potential. This is especially apparent when FreeNAS serves an NFS datastore to ESXi since, by default, all of those writes are synchronous. Best practice is to place the ZIL on a separate SSD.

Now, I wanted the configuration with the most IOPs, so I set out to test different configurations.

Test Configuration:

- VMware I/O Analyzer running on local disk

- FreeNAS presented as NFS datastore

- Added a 50GB disk to the VM (on a different SCSI controller, 1:0) on the target Storage Subsystem to test

- Block sizes are all 4K

- All tests are Random to simulate (at least) the IO Blender effect

- Tests were done for 30 minutes

- vSphere 5.5 U2

Baseline Benchmark using Synology DS412+

| IOPs | Configuration |
|---|---|
| 297.63 | 4k_50%Read_100%Random |
| 127.94 | 4k_0read_100rand |
| 1631.95 | 4k_100read_100rand |
| 1646.21 | 4k_70%Read_100%Random |

FreeNAS Benchmark

1st Config: 4 x 450GB as RAIDZ with SLOG (1 x 64GB SSD)

| IOPs | Configuration |
|---|---|
| 2346.06 | 4k_50%Read_100%Random |
| 531.58 | 4k_0read_100rand |
| 774.4 | 4k_100read_100rand |
| 3384.37 | 4k_70%Read_100%Random |

Comment: RAIDZ is the ZFS counterpart of RAID5, with a little tweaking. Right off the bat, this configuration is already faster than my previous setup, except for the 100% Read test. After some reading, I found out that in ZFS the random read performance of a single vDEV is roughly equal to that of 1 disk. Since this test uses a single RAIDZ vDEV, the reads are equal to those of 1 x 450GB disk.

2nd Config: 4 x 450GB as RAID10 with NO SLOG

For this test, I wanted to improve the 100% Read result, so I opted to use RAID10 (striped mirrors in ZFS terms). This creates 2 vDEVs, which effectively makes my reads equal to those of 2 disks. I also wanted to see the effect of not using a SLOG, so I left it out for this particular test.
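As a rough sketch of that rule of thumb, the snippet below estimates uncached random read IOPs as the number of vDEVs multiplied by the IOPs of one disk. The `PER_DISK_IOPS` value of 175 is only an assumed ballpark for a 15K RPM drive, not something measured here, and the function name is made up for illustration. Real numbers will land higher whenever reads are served from memory.

```python
# Rule-of-thumb sketch: random read IOPS scale with the number of vdevs,
# not with the number of disks. All names here are illustrative.

PER_DISK_IOPS = 175  # ASSUMPTION: ballpark for one 15K RPM SAS disk


def estimated_read_iops(vdev_count: int, per_disk_iops: float = PER_DISK_IOPS) -> float:
    """Estimate uncached random read IOPS for a pool with `vdev_count` vdevs."""
    if vdev_count < 1:
        raise ValueError("a pool needs at least one vdev")
    return vdev_count * per_disk_iops


# 4 disks in one RAIDZ vdev vs. the same 4 disks as 2 mirrored vdevs ("RAID10")
layouts = {"RAIDZ (1 vdev)": 1, "RAID10 (2 vdevs)": 2}

for name, vdevs in layouts.items():
    print(f"{name}: ~{estimated_read_iops(vdevs):.0f} read IOPS")
```

The point of the sketch is only the ratio: with the same four disks, going from one vDEV to two should roughly double the uncached random reads.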

| IOPs | Configuration |
|---|---|
| 1692.28 | 4k_50%Read_100%Random |
| 643.05 | 4k_0read_100rand |
| 2518.75 | 4k_100read_100rand |
| 1068.68 | 4k_70%Read_100%Random |

Comment: OK. That surely improved my 100% Read test, but the 100% write test only improved slightly (643.05 vs. 531.58 IOPs) because of the lack of a SLOG device: the log writes are consuming IOPs on the pool. Hmm. Now let’s try with a SLOG device.

3rd Config: 4 x 450GB as RAID10 with SLOG (1 x 64GB SSD)

| IOPs | Configuration |
|---|---|
| 4102.94 | 4k_50%Read_100%Random |
| 1223.79 | 4k_0read_100rand |
| 1916.41 | 4k_100read_100rand |
| 2174.81 | 4k_70%Read_100%Random |

Comment: Weee.. consistent 1K+ IOPs. You can clearly see that adding a SLOG greatly affects the performance of the pool.
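To put a number on that, here is a small sketch that divides the RAID10 results with a SLOG by the RAID10 results without one. The figures are copied from the 2nd and 3rd config results above; the dictionary and function names are just for illustration. A ratio above 1 means the SLOG run was faster.

```python
# IOPS measured on RAID10 without a SLOG (2nd config) and with one (3rd config)
no_slog = {
    "4k_50%Read_100%Random": 1692.28,
    "4k_0read_100rand": 643.05,
    "4k_100read_100rand": 2518.75,
    "4k_70%Read_100%Random": 1068.68,
}
with_slog = {
    "4k_50%Read_100%Random": 4102.94,
    "4k_0read_100rand": 1223.79,
    "4k_100read_100rand": 1916.41,
    "4k_70%Read_100%Random": 2174.81,
}


def slog_ratio(test: str) -> float:
    """IOPS with a SLOG divided by IOPS without one, for a single test."""
    return with_slog[test] / no_slog[test]


for test in no_slog:
    print(f"{test}: {slog_ratio(test):.2f}x")
```

Every test that includes writes comes out ahead with the SLOG, while the pure read test does not, which is what you would expect since a SLOG only handles log writes.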

Conclusion:

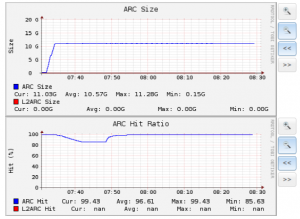

FreeNAS using ZFS is a good Open Storage solution, provided you size the hardware properly. Choosing the correct RAID configuration and SLOG for your workload is crucial to setting the correct performance expectation. For these tests, you can argue that much of the IO may have been cached in memory by the ARC (a different discussion), which it was, but I guess that’s the whole purpose of using ZFS.

Optional Configuration:

Since SLOG writes are continuous, it is best to use an SLC SSD for this purpose. Since I don’t have an SLC drive, I had to underprovision my MLC SSD (partition only part of it, which vendors usually call over-provisioning) to reduce wear on the device. Intel and Samsung have a utility for doing this. Unfortunately, there is no such tool for Crucial SSDs, so I had to do it manually from the server side. This is done as follows:

1. Secure erase the SSD (ensuring all zeros are written)

2. SSH to the FreeNAS server

3. Run camcontrol devlist to determine which device is the SSD

4. gpart create -s GPT ada0 (assuming it’s ada0)

5. gpart add -t freebsd-zfs -a 4k -s 8G ada0

6. zpool add YOURPOOLNAME log ada0p1
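As a convenience, steps 4 to 6 can be generated for a given device and pool with a small helper like the one below. This is only a dry-run sketch: it builds and prints the same three commands as strings and executes nothing. The function names and the pool name `tank` are made up for illustration. The second helper just shows how much of the SSD the 8G partition actually uses.

```python
from typing import List


def slog_commands(device: str, pool: str, size: str = "8G") -> List[str]:
    """Return the commands from steps 4-6 as strings; nothing is executed."""
    return [
        f"gpart create -s GPT {device}",
        f"gpart add -t freebsd-zfs -a 4k -s {size} {device}",
        f"zpool add {pool} log {device}p1",
    ]


def partitioned_share(partition_gb: float, disk_gb: float) -> float:
    """Fraction of the SSD that is actually partitioned for the SLOG."""
    return partition_gb / disk_gb


# "tank" is a hypothetical pool name; ada0 is the device assumed in step 4
for cmd in slog_commands("ada0", "tank"):
    print(cmd)

print(f"Partitioned share of a 64GB SSD: {partitioned_share(8, 64):.1%}")
```

Review the printed commands against the output of camcontrol devlist before running them by hand, since pointing gpart at the wrong device would wipe its partition table.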
