NOTE: This post is based on Tanzu Kubernetes Grid. If you are using another Kubernetes distribution, installing and using the Avi Kubernetes Operator manually should work.

With the release of Tanzu Kubernetes Grid 1.3 (TKG 1.3), the Avi Kubernetes Operator (AKO) can be pre-configured as part of Kubernetes cluster creation. This streamlines setting up Services of type LoadBalancer, especially for on-prem Kubernetes installs. This is HUGE, as it removes the complexity normally involved in exposing your Kubernetes services to the outside. For on-prem installs, the easiest option is usually MetalLB, which works but is not recommended for production. Now, with AVI, we get a production-grade load balancer for on-premises that natively integrates with Kubernetes!

In this post, I’ll list the high-level steps to set up AVI with TKG 1.3.

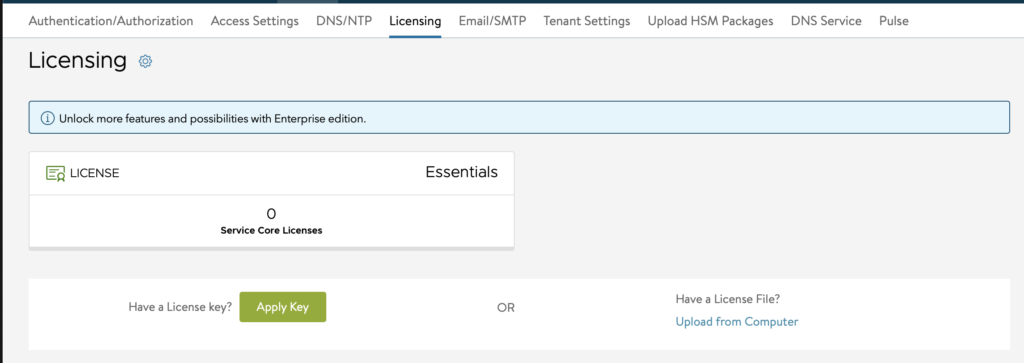

AVI will use the Essentials license, which has enough features to provide LoadBalancer services for Kubernetes. To compare the features of the AVI editions, refer to these links: https://avinetworks.com/docs/20.1/nsx-license-editions/ | https://avi.ldc.int/src/assets/docs/license-tiers-comparison.pdf

Preparing AVI Manager

- Deploy the OVA and go through the usual initial configuration. I won’t go over this step as it’s straightforward.

- Once Avi Manager is up, switch the license to Essentials. By default, it installs an Enterprise trial license, which we don’t need for this setup.

- Go to Administration -> Settings -> Licensing. Click the cog icon and change the license to Essentials.

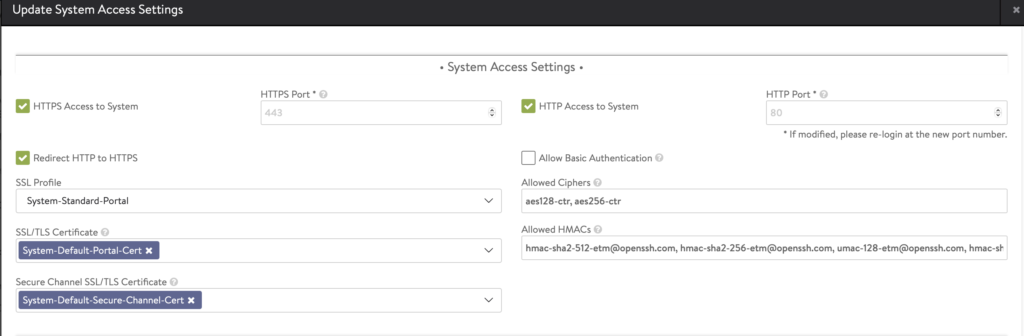

- Set up the AVI portal certificate

- Go to Administration -> Settings -> Access Settings. Make sure there’s only ONE certificate in the SSL/TLS Certificate field. AKO will use this certificate to connect to the AVI portal.

- Take note of the certificate

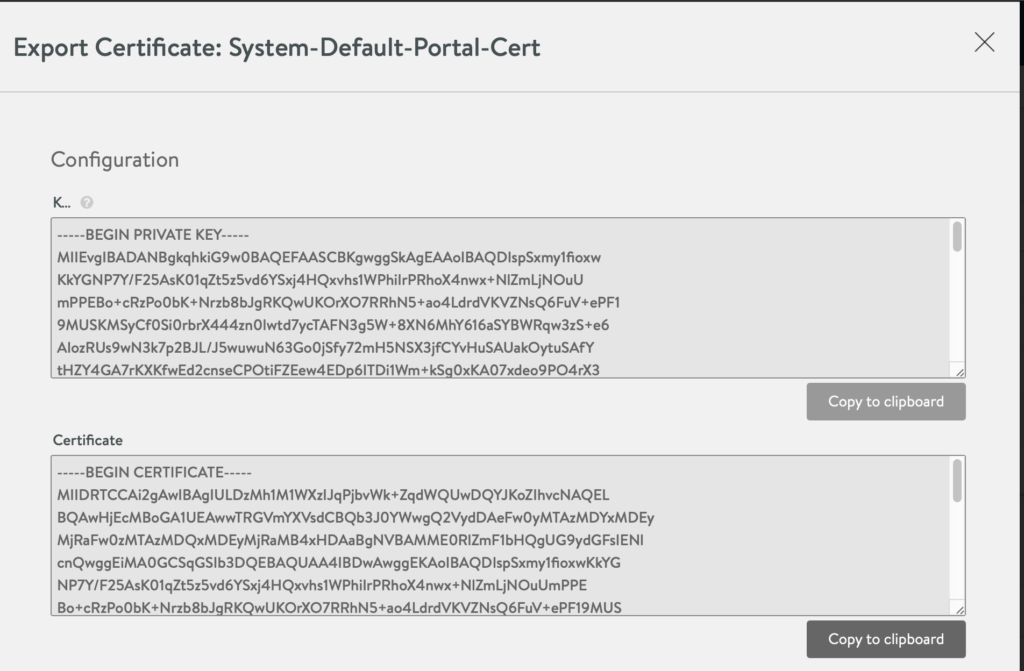

- Go to Templates -> Security -> SSL/TLS Certificate. Copy the certificate you specified in the previous step (click the download arrow button in the table). You will need it during the TKG setup.

- Keep this for later usage.

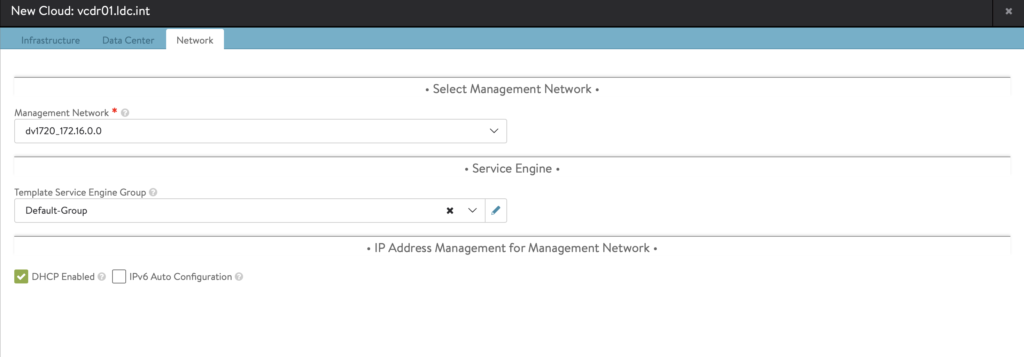

- Set up the vSphere cloud

- Go to Infrastructure -> Cloud -> Create. Select VMware vCenter/ESX.

- The next steps are straightforward.

- Just take note of the following:

- Keep everything at its default.

- IMPORTANT: Select the default IPAM in the drop-down.

- In Step 4: Network, select the dvPortGroup where the Management network will be placed. For my setup, I chose a normal VLAN that runs DHCP, so I selected the DHCP-enabled network.

- Set up the network where the LoadBalancer VIPs will be placed

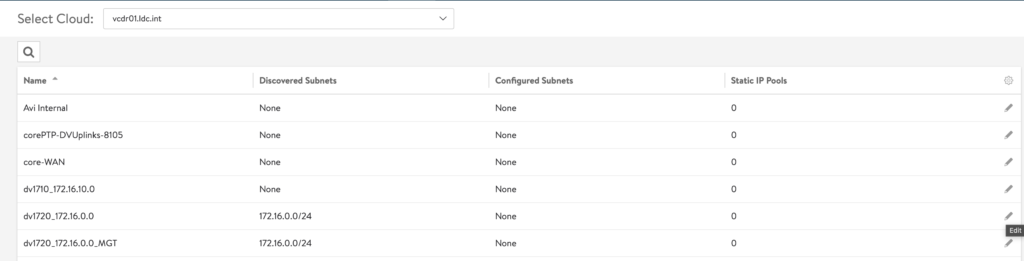

- Go to Infrastructure -> Networks. In the drop-down, select the vCenter cloud created previously.

- You’ll get a list of dvPortGroups. Select the one where you’ll place the VIPs and click Edit.

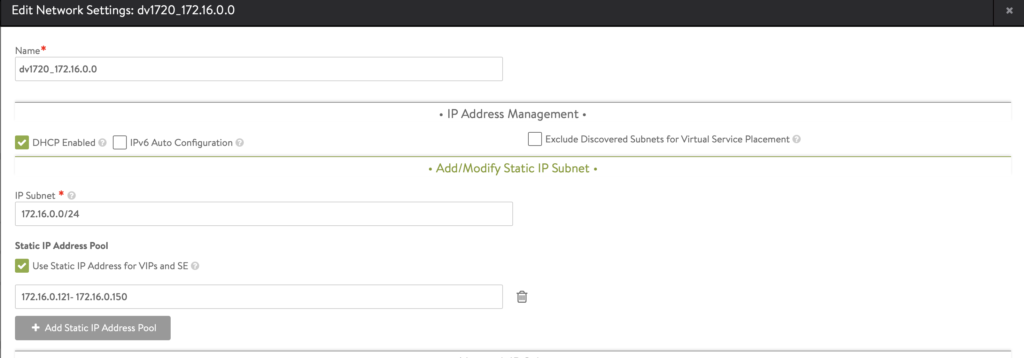

- In the Edit Page:

- Click Add Subnet

- Supply the IP/Subnet for the network in CIDR format

- Add Static IP Address Pool

- This is the pool from which IP addresses for Type:LoadBalancer services will be picked

- For my setup, I used the same Network for both Management and VIP setup.

- Management will be allocated using DHCP

- VIP address pool is explicitly specified outside of the DHCP range

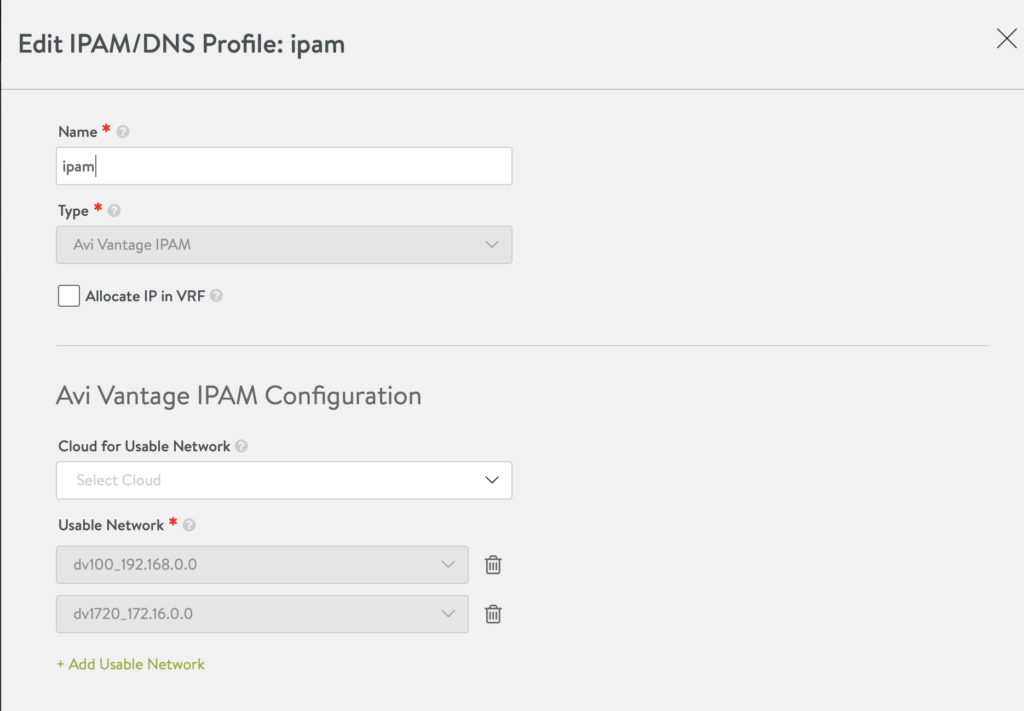
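Since the Management addresses come from DHCP and the VIP pool is carved out of the same network, it is worth checking that the two ranges do not collide before saving. Here is a minimal sketch of that check in Python, using only the standard `ipaddress` module. The function name and every address in it are hypothetical examples, not values from my environment.

```python
import ipaddress


def pool_is_valid(subnet_cidr, pool_start, pool_end, dhcp_start, dhcp_end):
    """Return True if the static VIP pool lies inside the subnet
    and does not overlap the DHCP range (all ranges inclusive)."""
    subnet = ipaddress.ip_network(subnet_cidr)
    ps, pe = ipaddress.ip_address(pool_start), ipaddress.ip_address(pool_end)
    ds, de = ipaddress.ip_address(dhcp_start), ipaddress.ip_address(dhcp_end)
    if ps > pe or ds > de:
        raise ValueError("range start must not be after range end")
    inside = ps in subnet and pe in subnet
    # Two inclusive ranges overlap when each one starts before the other ends.
    overlaps = ps <= de and ds <= pe
    return inside and not overlaps


# Hypothetical layout: DHCP hands out .50-.150, VIPs use .200-.250.
print(pool_is_valid("172.16.0.0/24",
                    "172.16.0.200", "172.16.0.250",
                    "172.16.0.50", "172.16.0.150"))
```

This only validates the address plan on paper; it does not talk to AVI or to the DHCP server.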

Setup IPAM

Once you have everything configured, you need to tell AVI to “keep track” of the IP addresses it will be issuing to the different type:LoadBalancer services. To do this:

- In AVI Manager, go to Templates -> Profiles -> IPAM/DNS Profile

- Edit the default IPAM and add the same dvPortGroup you selected in the previous step.

- NOTE: Make sure the same IPAM Profile is configured on the cloud (the part where you connect the vCenter) you’ll be using.

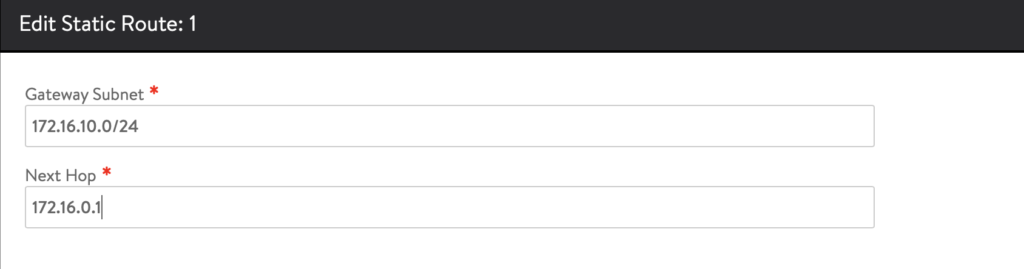

Setting up routes from Service Engine to AKO

In my setup, I’ve deployed the VIP network and the k8s node network on two separate subnets. You need to explicitly define in AVI how to reach the k8s node network. To do this:

- Go to Infrastructure -> Routing and add a new Static Route.

- In my setup I had the following:

  - VIP network: 172.16.0.0/24

  - K8s node network: 172.16.10.0/24

  - Hence, the static route is 172.16.10.0/24 via 172.16.0.1, which translates to “go to this IP if you want to reach that k8s network”.
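The reasoning behind that route can be sketched in a few lines of Python with the standard `ipaddress` module. A route is only needed when the node network is not part of the VIP network, and the next hop has to be an address on the VIP network itself. The function names are hypothetical, and the sketch assumes the Service Engine's data interface sits on the VIP network, as in my setup.

```python
import ipaddress


def needs_static_route(vip_cidr, node_cidr):
    """A static route is needed when the node network is not
    contained in the VIP network the Service Engine is attached to."""
    vip = ipaddress.ip_network(vip_cidr)
    nodes = ipaddress.ip_network(node_cidr)
    return not nodes.subnet_of(vip)


def gateway_is_on_link(vip_cidr, gateway):
    """The next hop must be directly reachable, i.e. inside the VIP network."""
    return ipaddress.ip_address(gateway) in ipaddress.ip_network(vip_cidr)


# Values from the setup described above.
print(needs_static_route("172.16.0.0/24", "172.16.10.0/24"))
print(gateway_is_on_link("172.16.0.0/24", "172.16.0.1"))
```

If the VIPs and the k8s nodes share one subnet, the first check returns False and this whole routing step can be skipped.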

Installing TKG 1.3

I’ve covered this part in detail before. The only changes for the TKG 1.3 installation are the following:

- The tkg CLI is no more. It’s replaced by the tanzu command. To start the UI install, issue the following command:

    tanzu management-cluster create --ui

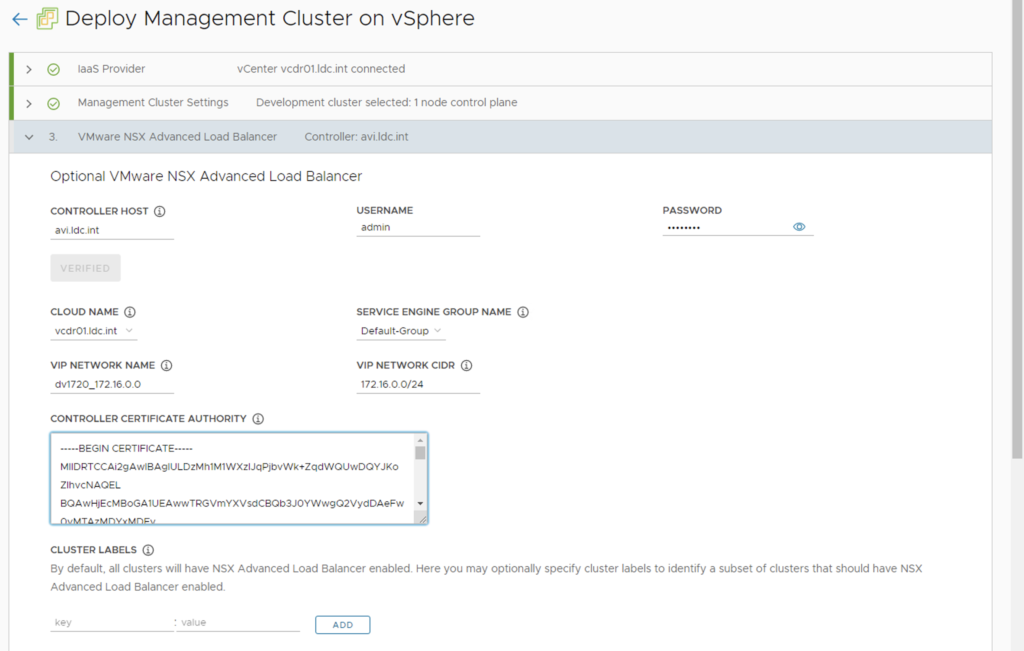

- In the wizard, there’s a new NSX Advanced Load Balancer section. Fill it out as follows, based on the previous steps:

- Controller Host: the address of the AVI Manager (controller)

- Cloud Name: the cloud you set up previously

- Service Engine Group: Service Engine group where

- VIP Network Name: the name of the dvPortGroup on which you set up the static IP pool in the previous step

- VIP Network CIDR: the CIDR for this network

- Controller Certificate Authority: the certificate the portal is using, which you downloaded in the previous step

- NOTE: Keep the cluster label empty unless you want to enable “Load Balancing” for specific workload clusters only. To do that, specify a key-value pair here and use the same key-value pair when you create the workload cluster.

- There’s a new section for setting up OIDC/authentication. I skip it in this post.

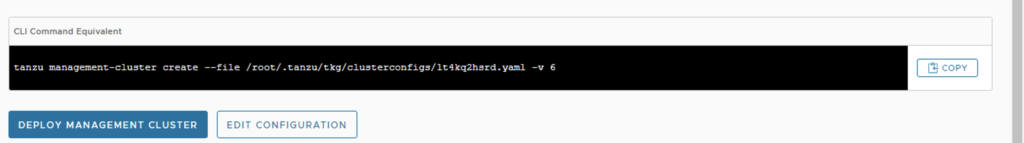

- After the configuration, make sure to create a COPY of the resulting configuration file. We will use it as the basis for creating the workload cluster later.

Install TKG Workload Cluster

Once TKG Management cluster is created, it’s time to create the TKG workload cluster. This is done using the same configuration file that was used to create the management cluster with the following changes in the file:

    # specify the cluster name for the new Kubernetes cluster
    CLUSTER_NAME: tkg-prod-pvt

    # specify the FQDN/IP that will be used by the control plane. This is also how kubectl will reach this cluster
    VSPHERE_CONTROL_PLANE_ENDPOINT: tkg-prod-pvt.ldc.int

    # customize the config as needed. In this case, I opted to increase the memory and the number of worker nodes.
    VSPHERE_WORKER_MEM_MIB: "8192"
    WORKER_MACHINE_COUNT: 3

Initiate the install using the following command:

    tanzu cluster create <CLUSTER NAME> --file FILENAME.yaml

After installation, you can get the kubeconfig to connect to the workload cluster by doing the following:

    # if you have multiple TKG management clusters, first issue the following:
    # tanzu login
    tanzu cluster kubeconfig get <CLUSTER NAME> --admin --export-file <FILENAME to export to>

Verify Avi Kubernetes Operator

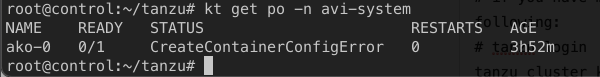

Issue the following:

    kubectl get po -n avi-system

In my case the AKO pod was not running, because it was looking for avi-secret, which it needs to log in to Avi Manager. I first assumed this was expected.

UPDATE 1: This is not expected. My environment was not properly configured (it could not look up the Avi Manager due to a DNS issue), hence the error. Normally, avi-secret should be loaded automatically.

UPDATE 2: I got confirmation that it may be due to the unsupported AVI version I was using. TKG 1.3 is supported with AVI 20.1.3; I was using 20.1.4 for this post.

UPDATE 3-FINAL: The cause is an invalid self-signed certificate in AVI. To have the secret created correctly during cluster creation, make sure the hostname is in both the CN and the SAN of the AVI Manager certificate. Basically, after installing AVI, change the default certificate to include the HOSTNAME in both the SAN and CN fields. Instructions here: https://avinetworks.com/docs/latest/how-to-change-the-default-portal-certificate/#:~:text=Once%20the%20certificate%20is%20successfully,Click%20Save.
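To make the CN-and-SAN requirement concrete, here is a minimal Python sketch of the check. It works on a certificate that has already been parsed into the dict shape returned by the standard library's `ssl.SSLSocket.getpeercert()` (which only returns a populated dict when the certificate was validated). The function name and the hostname are hypothetical. It does exact matching on DNS entries only, so wildcards and IP-address SANs are not handled.

```python
def hostname_in_cn_and_san(cert, hostname):
    """Return True if hostname appears both as a commonName in the subject
    and as a DNS entry in subjectAltName of a parsed certificate dict."""
    wanted = hostname.lower()
    # 'subject' is a tuple of RDNs, each a tuple of (key, value) pairs.
    common_names = [
        value.lower()
        for rdn in cert.get("subject", ())
        for key, value in rdn
        if key == "commonName"
    ]
    # 'subjectAltName' is a tuple of (type, value) pairs, e.g. ("DNS", "host").
    san_dns = [
        value.lower()
        for kind, value in cert.get("subjectAltName", ())
        if kind == "DNS"
    ]
    return wanted in common_names and wanted in san_dns


# Hypothetical parsed certificate for a controller named avi.example.internal.
example_cert = {
    "subject": ((("commonName", "avi.example.internal"),),),
    "subjectAltName": (("DNS", "avi.example.internal"),),
}
print(hostname_in_cn_and_san(example_cert, "avi.example.internal"))
```

A certificate that carries the hostname only in the CN, with no matching SAN entry, fails this check, which is the situation I ran into.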

To work around the issue in my environment, I created the appropriate secret object manually:

    kubectl create secret generic avi-secret --from-literal=username=admin --from-literal=password=<AVI Manager password> -n avi-system

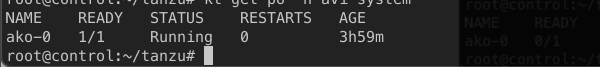

After that, the pod should be running.

Testing the type:LoadBalancer

Here’s a sample application that uses type:LoadBalancer. Submit it against the workload cluster.

    apiVersion: v1
    kind: Service
    metadata:
      name: lb-svc
    spec:
      selector:
        app: lb-svc
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
      type: LoadBalancer
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: lb-svc
    spec:
      replicas: 2
      selector:
        matchLabels:
          app: lb-svc
      template:
        metadata:
          labels:
            app: lb-svc
        spec:
          serviceAccountName: default
          containers:
            - name: nginx
              image: gcr.io/kubernetes-development-244305/nginx:latest

After submitting, you’ll notice that Service Engine appliances are automatically deployed to the vCenter. Their configuration is based on the SE Default Group configuration for that cloud, which by default deploys 2 x SE in Active/Standby mode.

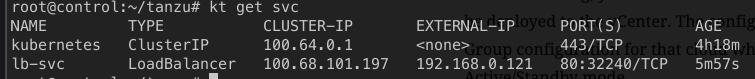

After a couple of minutes, the IP for the LoadBalancer should be visible.

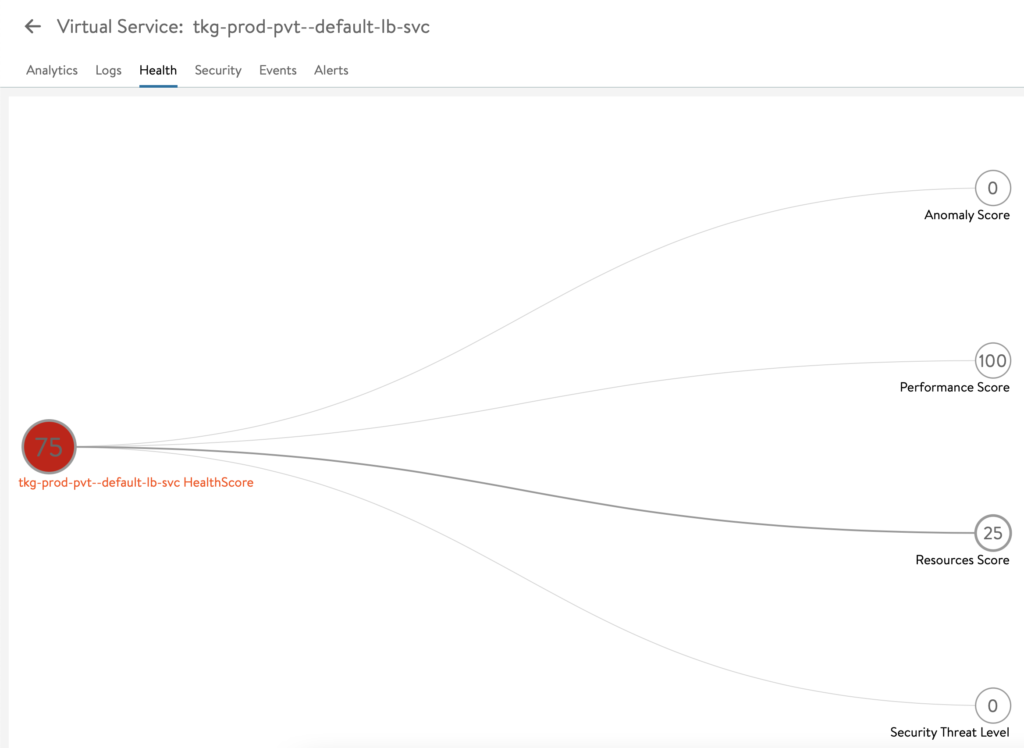

The beauty of using AVI is the full metrics and monitoring you get out of the box.

That’s it. Enjoy and hope that helps 🙂


This is a fantastic post. The part about the Avi secret, we had the exact same problem. How do you know this is a DNS resolving issue?

We worked around the issue in the exact way you prescribed.

I got confirmation on the issue on this one.

It’s because the self-signed certificate of the AVI Manager is not properly formatted.

The hostname/FQDN of the AVI Manager should be both in the CN and SAN portion of the certificate.

YES!! WE had this exact issue today.

Are we sure that this is DNS tho? I don’t see how re-creating the secret would solve a DNS resolution problem.

In my case, I suspected that it was just a misconfigured Avi password…. unless somehow re-creating the secret changes how AKO is trying to reach your Avi controller.

I got confirmation on the issue on this one.

It’s because the self-signed certificate of the AVI Manager is not properly formatted.

The hostname/FQDN of the AVI Manager should be both in the CN and SAN portion of the certificate.