If you’re reading this, you’re either:

- sweating because you can’t recover data from a Pod that was using a PV

- or looking for a way to safely delete Pods without affecting the data stored in a PV

Either way, I came across the same dilemma while migrating my apps to Argo CD. It took a while to find this, so I’m documenting it for anyone who wants the solution. If the PV is already released, skip to step 3.

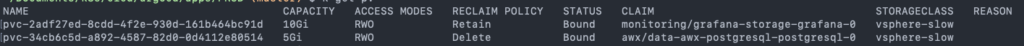

1. First things first: make sure the PersistentVolume reclaim policy (persistentVolumeReclaimPolicy) is set to Retain. If it’s currently set to Delete, you can patch the PV by issuing:

kubectl patch pv <your-pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
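If you want to check the current policy before patching, something along these lines should work. It is only a sketch: `ensure_retain` is a helper name made up for this example, and `demo-pv` in the usage line is a placeholder for your own PV name.

```shell
# Hypothetical helper (not part of kubectl): switch a PV to Retain
# only if it is not already set that way.
ensure_retain() {
  pv_name="$1"
  # Read the current reclaim policy straight from the PV spec.
  policy="$(kubectl get pv "$pv_name" -o jsonpath='{.spec.persistentVolumeReclaimPolicy}')"
  if [ "$policy" = "Retain" ]; then
    echo "$pv_name already uses Retain"
  else
    kubectl patch pv "$pv_name" -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
  fi
}

# Usage (placeholder name): ensure_retain demo-pv
```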

2. Next, delete the resources that were using it. Once the PVC holding it is deleted (along with the Pod using it), the status of the PV becomes Released.

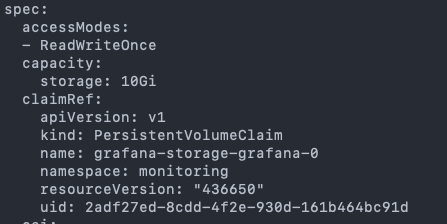

3. With the PV in the Released state, edit it and remove the spec.claimRef block:

kubectl edit pv <pv name>

4. After you remove the spec.claimRef block, the PV becomes Available. Proceed to re-add your application.
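If you would rather not open an editor, steps 3 and 4 can probably be scripted with a JSON patch instead of kubectl edit. This is a sketch under assumptions: `release_to_available` is a helper name invented for this example, `demo-pv` is a placeholder, and the patch fails if the PV has no spec.claimRef to remove.

```shell
# Hypothetical helper (not part of kubectl): drop spec.claimRef from a
# PV, but only when the PV is actually in the Released phase.
release_to_available() {
  pv_name="$1"
  phase="$(kubectl get pv "$pv_name" -o jsonpath='{.status.phase}')"
  if [ "$phase" != "Released" ]; then
    echo "$pv_name is $phase, not Released; leaving it untouched" >&2
    return 1
  fi
  # A JSON patch "remove" op deletes the whole claimRef block.
  kubectl patch pv "$pv_name" --type json -p '[{"op":"remove","path":"/spec/claimRef"}]'
}

# Usage (placeholder name): release_to_available demo-pv
```

Afterwards, kubectl get pv should show the volume as Available.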

Hope that helps.

Enjoy!

NOTE: Another option is to clone the volume (whether this is supported depends on the storage provider):

https://kubernetes.io/blog/2019/06/21/introducing-volume-cloning-alpha-for-kubernetes/


Thanks for the eye opener.

Say I was running a MariaDB-Galera statefulset and I then cleared my cluster and rebuilt it. Is it then possible to re-create the Galera cluster and have it re-use the PVC that’s still present on the NFS server? I plan on experimenting – especially now that I’m aware you can patch the PV – but thought I’d ask anyway as you may have already performed this experiment before.

Cheers,

ak.

I think that as long as the PV is released and you reference the correct PVC to reuse it, you should be good.

One thing to note: make sure the PV is used only for the DB’s data. Depending on how the container/Pod uses the mount, double-check that it isn’t doing anything weird (like reinitialising the disk).

Thanks for getting back.

I actually experimented with this in my cluster and was able to get the cluster to reuse as you showed above. However, I was hoping we could use proper names to avoid having to edit out the ClaimRef. For instance, I have services that use a manual storage class and since I give them names that don’t have random characters, I do not need to edit out the claim reference as I can delete the link programmatically. I’ll have to see if I can play about with the Helm values to that end but that’s an exercise for a much later time.

Thanks again.


@Gubi

The above procedure helped me recover the PV from Released to Available.

Thank you for sharing the procedure.