NOTE: This post is based on Tanzu Kubernetes Grid (TKG). If you are using a different Kubernetes distribution, installing and using the Avi Kubernetes Operator (AKO) manually should work.

With the release of Tanzu Kubernetes Grid 1.3 (TKG 1.3), the Avi Kubernetes Operator can be pre-configured as part of Kubernetes cluster creation. This streamlines setting up type: LoadBalancer Services, especially for on-prem Kubernetes installs. This is HUGE, as it removes the complexity normally involved in exposing your Kubernetes services to the outside. For on-prem installs, the easiest option has usually been MetalLB, which works but is not recommended for production. Now, with AVI, we get a production-grade load balancer for on-premises environments that natively integrates with Kubernetes!

In this post, I'll list the high-level steps to set up AVI with TKG 1.3.

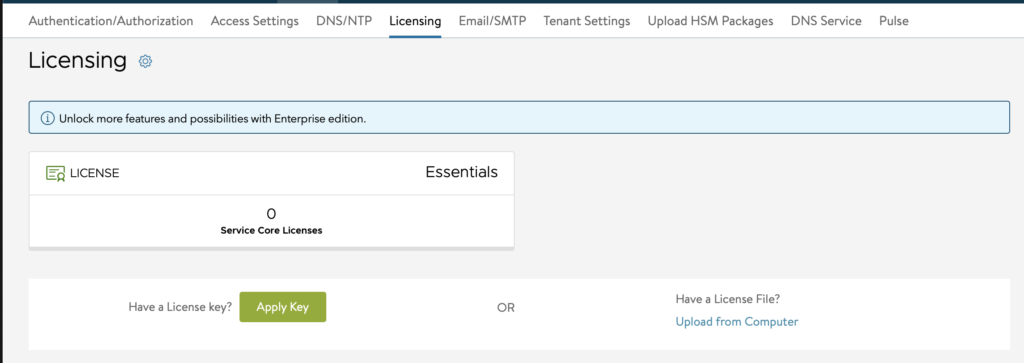

For AVI, we'll use the Essentials license, which has enough features to provide LoadBalancer services for Kubernetes. To compare AVI edition features, refer to: https://avinetworks.com/docs/20.1/nsx-license-editions/ | https://avi.ldc.int/src/assets/docs/license-tiers-comparison.pdf

Preparing AVI Manager

- Deploy the OVA and go through the usual initial configuration. I won't cover this step as it's straightforward.

- Once the Avi Manager is up, switch the license to Essentials. By default, it comes with an Enterprise trial license, which we don't need for this setup.

- Go to Administration -> Settings -> Licensing. Click the cog icon and change the license to Essentials.

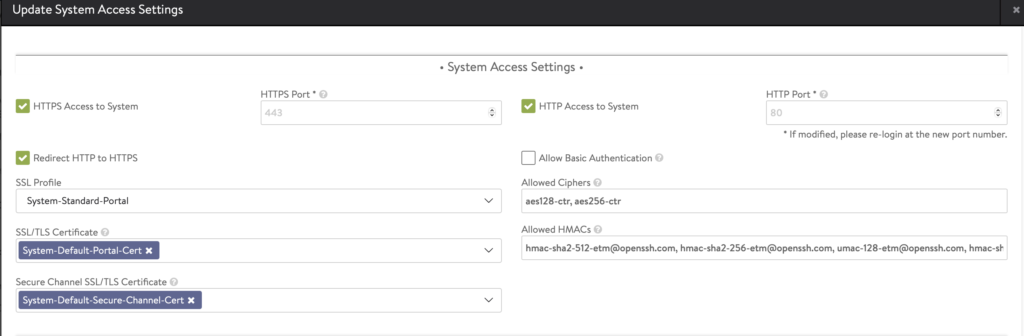

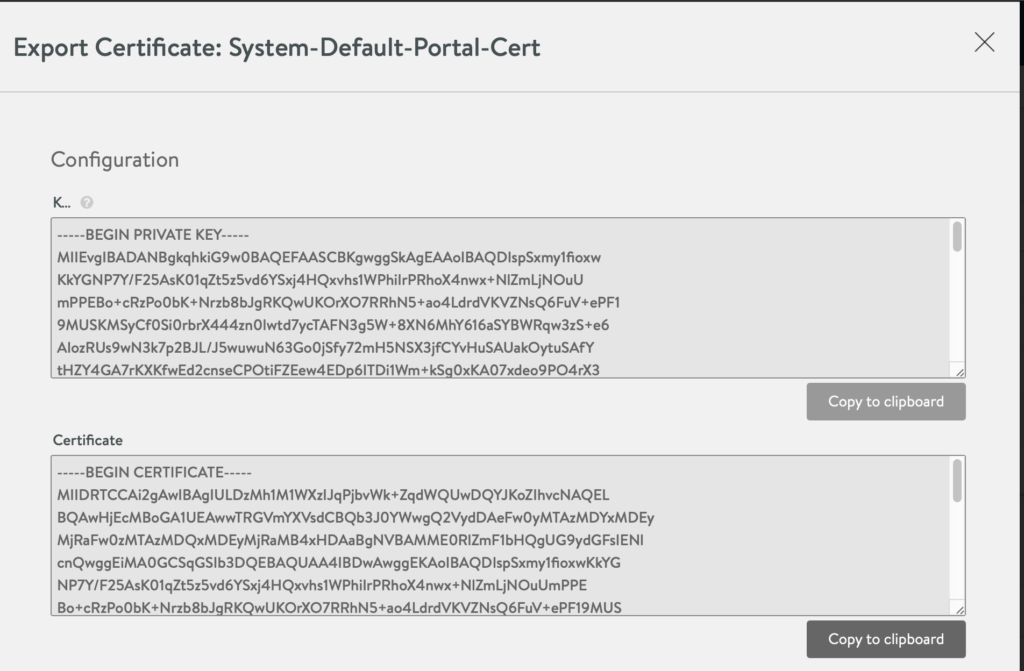

- Set up the AVI portal certificate

- Go to Administration -> Settings -> Access Settings. Make sure there's only ONE certificate in the SSL/TLS Certificate field. AKO uses this certificate to connect to the AVI portal.

- Take note of the certificate's name.

- Go to Templates -> Security -> SSL/TLS Certificate and copy the certificate you noted in the previous step (click the download/arrow button in the table). Keep it for later; you'll need it during the TKG setup.

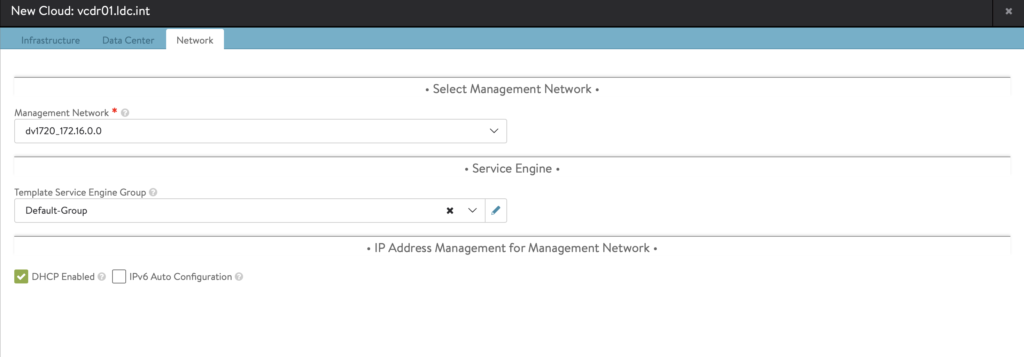
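Since AKO can fail to log in to the controller when the AVI Manager's hostname is missing from either the CN or the SAN of the portal certificate, it's worth checking the certificate at this point. Below is a minimal Python sketch of that check, not an official tool. It assumes you have the certificate's subject and SAN in the dict shape returned by Python's `ssl.SSLSocket.getpeercert()`; for a self-signed certificate you can copy those values by hand from `openssl x509 -in <file> -noout -text`. The SAN IP address in the example is hypothetical.

```python
def missing_name_fields(cert: dict, hostname: str) -> list:
    """Return which of "CN" and "SAN" do not contain `hostname` (empty list = OK).

    `cert` uses the dict shape of ssl.SSLSocket.getpeercert(): 'subject' is a
    tuple of RDNs, each a tuple of (key, value) pairs, and 'subjectAltName'
    is a tuple of (type, value) pairs.
    """
    wanted = hostname.lower()
    # Names are compared case-insensitively.
    common_names = [
        value.lower()
        for rdn in cert.get("subject", ())
        for key, value in rdn
        if key == "commonName"
    ]
    alt_names = [
        value.lower()
        for kind, value in cert.get("subjectAltName", ())
        if kind in ("DNS", "IP Address")
    ]
    missing = []
    if wanted not in common_names:
        missing.append("CN")
    if wanted not in alt_names:
        missing.append("SAN")
    return missing


# Example: hostname present in the CN only (hypothetical SAN value).
cert = {
    "subject": ((("commonName", "avi.ldc.int"),),),
    "subjectAltName": (("IP Address", "172.16.0.10"),),
}
print(missing_name_fields(cert, "avi.ldc.int"))  # ['SAN']
```

An empty list means the hostname appears in both places, which is what AKO needs.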

- Set up the vSphere cloud

- Go to Infrastructure -> Cloud -> Create and select VMware vCenter/ESX.

- The next steps are straightforward.

- Just take note of the following:

- Keep everything at the defaults.

- IMPORTANT: Select the default IPAM profile in the drop-down.

- In Step 4: Network, select the dvPortGroup where the Service Engine management interfaces will be placed. In my setup, I chose a normal VLAN running DHCP, so I marked the network as DHCP-enabled.

- Setup the Network where LoadBalancer VIP will be placed

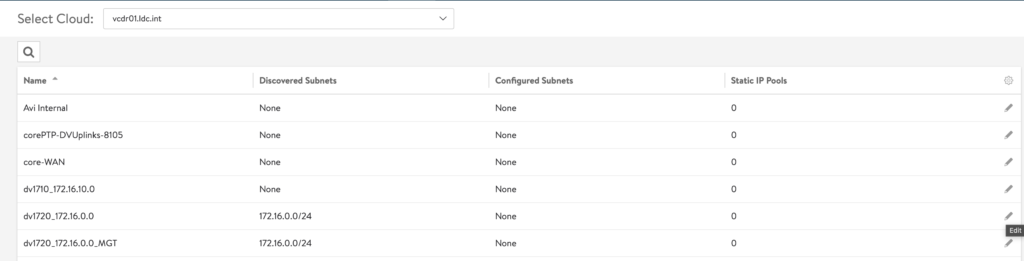

- Go to Infrastructure -> Networks. In the Drop-Down, select the vCenter cloud created previously.

- You’ll get a list of dvPortGroups. Select the one where you’ll place the VIP and Click Edit

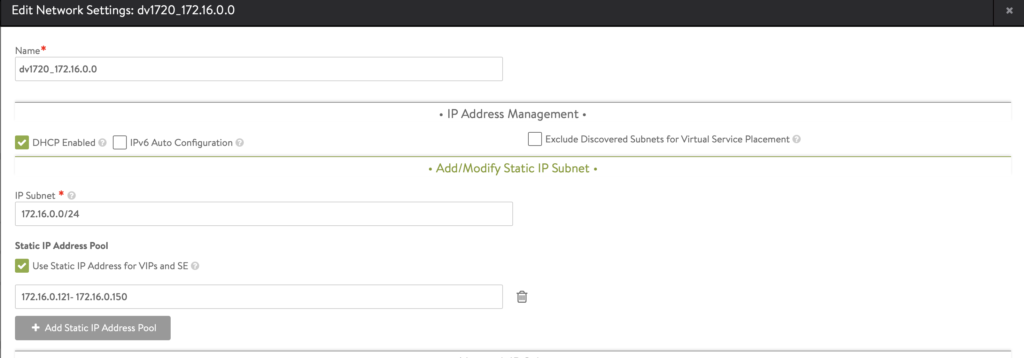

- In the Edit Page:

- Click Add Subnet

- Supply the IP/Subnet for the network in CIDR format

- Add Static IP Address Pool

- This where IP address for Type:LoaBalancer will be picked

- For my setup, I used the same Network for both Management and VIP setup.

- Management will be allocated using DHCP

- VIP address pool is explicitly specified outside of the DHCP range

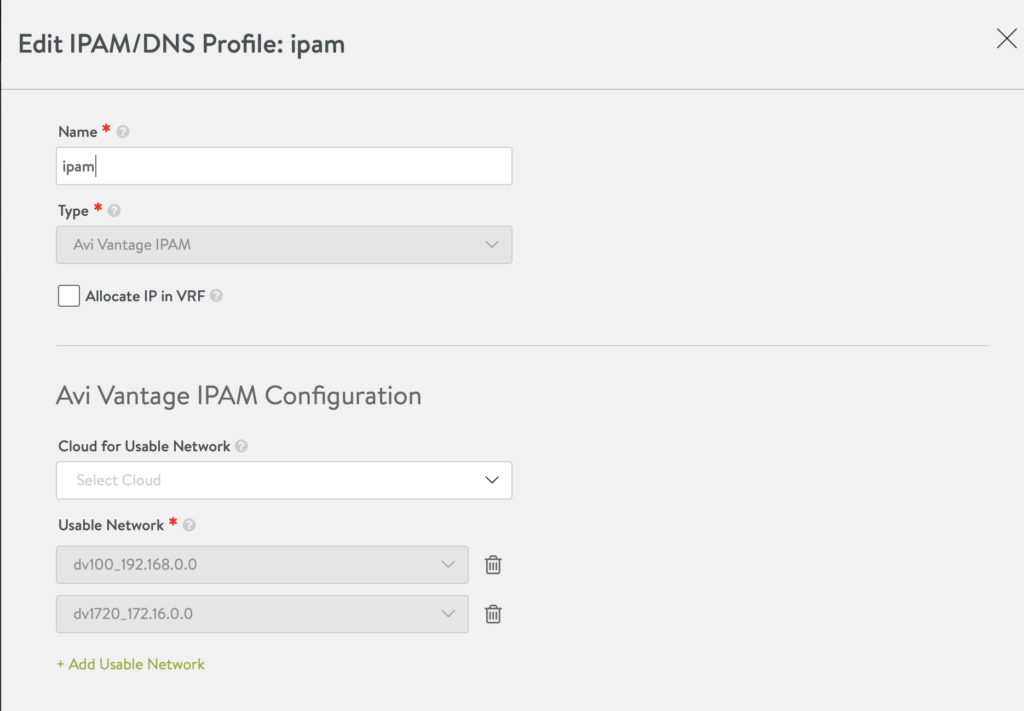
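Before saving, a quick sanity check on the pool helps: it should sit inside the subnet and must not overlap the DHCP range used for management. Here is a minimal Python sketch using the standard `ipaddress` module. The subnet is the 172.16.0.0/24 VIP network used in this post; the DHCP and pool ranges are hypothetical examples, and `check_vip_pool` is an illustrative helper, not something AVI provides.

```python
import ipaddress


def check_vip_pool(subnet_cidr: str, pool_start: str, pool_end: str,
                   dhcp_start: str, dhcp_end: str) -> list:
    """Return a list of problems with a static VIP pool (empty list = looks fine)."""
    subnet = ipaddress.ip_network(subnet_cidr)
    pool_lo, pool_hi = ipaddress.ip_address(pool_start), ipaddress.ip_address(pool_end)
    dhcp_lo, dhcp_hi = ipaddress.ip_address(dhcp_start), ipaddress.ip_address(dhcp_end)
    problems = []
    if pool_lo > pool_hi:
        problems.append("pool start is after pool end")
    # Usable addresses exclude the subnet's network and broadcast addresses.
    if not (subnet.network_address < pool_lo and pool_hi < subnet.broadcast_address):
        problems.append(f"pool is not inside the usable range of {subnet}")
    # Two inclusive ranges overlap unless one ends before the other starts.
    if not (pool_hi < dhcp_lo or dhcp_hi < pool_lo):
        problems.append("pool overlaps the DHCP range")
    return problems


# VIP network from this post; the DHCP and pool ranges are hypothetical.
print(check_vip_pool("172.16.0.0/24", "172.16.0.200", "172.16.0.250",
                     "172.16.0.100", "172.16.0.199"))  # []
```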

Set up IPAM

Once everything is configured, you need to tell AVI to “keep track” of the IP addresses it issues to the different type: LoadBalancer Services. To do this:

- In AVI Manager, go to Templates -> Profiles -> IPAM/DNS Profile.

- Edit the default IPAM profile and add the same dvPortGroup you selected in the previous step.

- NOTE: Make sure the same IPAM profile is configured on the cloud you'll be using (the one connected to vCenter).

Setting up routes from the Service Engines to the Kubernetes node network

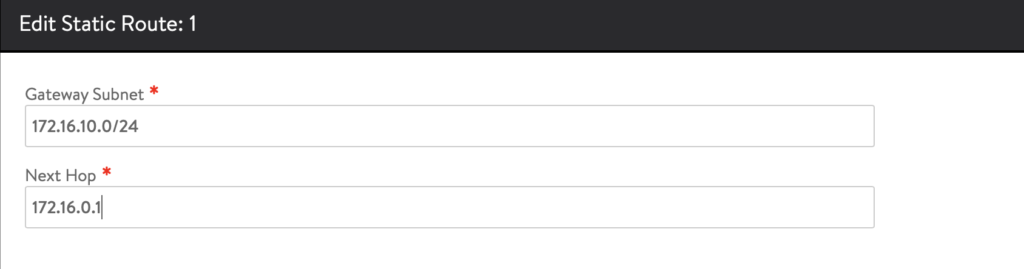

In my setup, the VIP network and the k8s node network are on two separate subnets, so you need to explicitly tell AVI how to reach the k8s node network:

- Go to Infrastructure -> Routing and add a new static route.

- In my setup, I had the following:

- VIP network: 172.16.0.0/24

- K8s node network: 172.16.10.0/24

- Hence, the static route is 172.16.10.0/24 via 172.16.0.1, which translates to “to reach the k8s node network, go through 172.16.0.1”.

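The reasoning behind that route can be sketched in Python with the standard `ipaddress` module: a route is needed when the node network is not directly connected to the SE's VIP network, and the next hop must itself be on the VIP network. This is a simplified model of the two-subnet setup in this post (it ignores any other SE interfaces), not how AVI computes routes; `static_route` is a hypothetical helper.

```python
import ipaddress
from typing import Optional


def static_route(vip_cidr: str, node_cidr: str, gateway: str) -> Optional[str]:
    """Return the static route the Service Engines need, or None if none is needed."""
    vip_net = ipaddress.ip_network(vip_cidr)
    node_net = ipaddress.ip_network(node_cidr)
    next_hop = ipaddress.ip_address(gateway)
    if vip_net.overlaps(node_net):
        return None  # nodes are directly connected; no route required
    if next_hop not in vip_net:
        # The SE can only forward to a gateway it can reach on-link.
        raise ValueError(f"next hop {next_hop} is not reachable on {vip_net}")
    return f"{node_net} via {next_hop}"


print(static_route("172.16.0.0/24", "172.16.10.0/24", "172.16.0.1"))
# 172.16.10.0/24 via 172.16.0.1
```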

Installing TKG 1.3

I've covered this part in detail before; the only changes for the TKG 1.3 installation are the following:

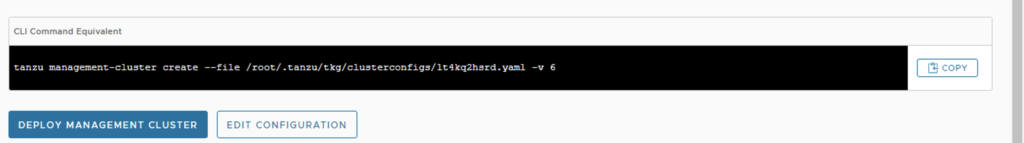

- The tkg CLI is no more; it's replaced by the tanzu CLI. To start the UI-based install, run:

```shell
tanzu management-cluster create --ui
```

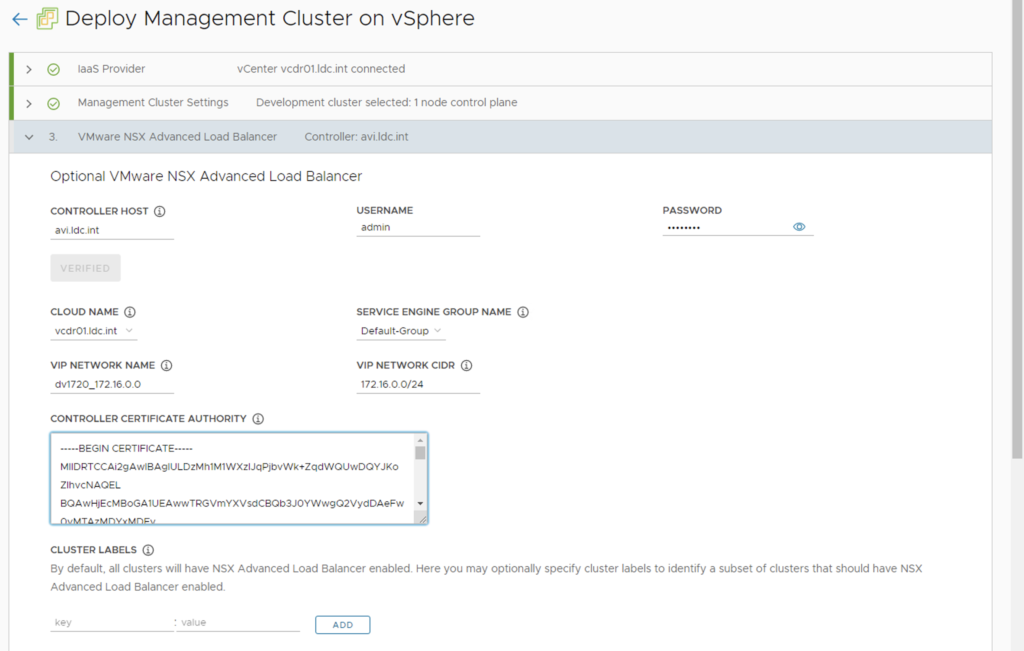

- In the wizard, there's a new NSX Advanced Load Balancer section. Fill out the following based on the previous steps:

- Controller Host: the hostname or IP of the AVI Manager (controller)

- Cloud Name: the cloud name you set up previously

- Service Engine Group: the Service Engine group in which the Service Engines will be deployed

- VIP Network Name: the name of the dvPortGroup where you set up the static IP pool

- VIP Network CIDR: the CIDR of that network

- Controller Certificate Authority: the certificate the portal is using (the one you downloaded earlier)

- NOTE: Keep Cluster Labels empty unless you want to enable load balancing only for specific workload clusters. To do that, specify a key-value pair here and use the same key-value pair as a label when you create the workload cluster.

- There's also a new section to set up OIDC/authentication; we'll skip it in this post.

- After the configuration, make sure to save a COPY of the resulting configuration file. We'll use it as the basis for creating the workload cluster later.
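The wizard takes the controller certificate as pasted PEM text, while the configuration file it saves holds it base64-encoded on a single line (TKG 1.3 files name this key `AVI_CA_DATA_B64`; check the key in your own generated file). If you ever need to update that value in the copied file, for example after replacing the portal certificate, here is a minimal Python sketch for producing and checking it. The function names are illustrative only.

```python
import base64


def encode_ca_for_config(pem_text: str) -> str:
    """Base64-encode a PEM certificate into a single line for the config file."""
    return base64.b64encode(pem_text.encode("ascii")).decode("ascii")


def decode_ca_from_config(value: str) -> str:
    """Reverse of encode_ca_for_config, handy for checking what a config file holds."""
    return base64.b64decode(value).decode("ascii")
```

For example, `encode_ca_for_config(open("avi-portal.pem").read())` returns the value to paste, where `avi-portal.pem` is a hypothetical name for the certificate downloaded from Templates -> Security -> SSL/TLS Certificate.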

Install TKG Workload Cluster

Once the TKG management cluster is created, it's time to create the TKG workload cluster. This uses the same configuration file that was used to create the management cluster, with the following changes:

```yaml
# specify the cluster name for the new Kubernetes cluster
CLUSTER_NAME: tkg-prod-pvt

# specify the FQDN/IP that will be used by the control plane. This is also how kubectl will reach this cluster
VSPHERE_CONTROL_PLANE_ENDPOINT: tkg-prod-pvt.ldc.int

# customize the config as needed. In this case, I opted to increase the memory and the number of worker nodes.
VSPHERE_WORKER_MEM_MIB: "8192"
WORKER_MACHINE_COUNT: 3
```
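If you create workload clusters often, these edits can be scripted. Here is a minimal Python sketch, assuming the copied file is flat `KEY: value` YAML with one setting per line, as in the file the wizard generates. Comments and indented lines are left alone, and nested YAML or multi-line values are not handled. `apply_overrides` is a hypothetical helper, not part of the tanzu CLI.

```python
def apply_overrides(config_text: str, overrides: dict) -> str:
    """Return config_text with top-level `KEY: value` lines replaced or appended.

    Comment lines and indented lines are copied unchanged; override keys not
    found in the file are appended at the end in the order given.
    """
    remaining = dict(overrides)
    lines = []
    for line in config_text.splitlines():
        key, sep, _ = line.partition(":")
        key = key.strip()
        is_top_level = bool(sep) and not line.startswith((" ", "\t", "#"))
        if is_top_level and key in remaining:
            lines.append(f"{key}: {remaining.pop(key)}")
        else:
            lines.append(line)
    # Settings the management-cluster file did not have yet.
    lines.extend(f"{key}: {value}" for key, value in remaining.items())
    return "\n".join(lines) + "\n"
```

For example, read the copied management-cluster file, call `apply_overrides(text, {"CLUSTER_NAME": "tkg-prod-pvt", "VSPHERE_WORKER_MEM_MIB": '"8192"', "WORKER_MACHINE_COUNT": "3"})`, write the result to a new file such as `tkg-prod-pvt.yaml` (hypothetical name), and pass that file to `tanzu cluster create`.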

Start the installation with the following command:

```shell
tanzu cluster create <CLUSTER NAME> --file FILENAME.yaml
```

After installation, you can get the kubeconfig to connect to the workload cluster as follows:

```shell
# if you have multiple TKG management clusters, log in to the right one first:
# tanzu login

tanzu cluster kubeconfig get <CLUSTER NAME> --admin --export-file <FILENAME to export to>
```

Verify Avi Kubernetes Operator

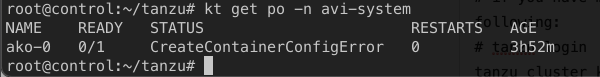

Run the following:

```shell
kubectl get po -n avi-system
```

The AKO pod is not running. This is expected, as it's looking for avi-secret to be able to log in to the Avi Manager.

UPDATE 1: This is not expected. My environment was not properly configured (it could not look up the Avi Manager due to a DNS issue), hence the error. Normally, avi-secret should be created automatically.

UPDATE 2: I got confirmation that it may be due to the unsupported AVI version I was using. TKG 1.3 supports AVI 20.1.3; I was using 20.1.4 in this post.

UPDATE 3 (FINAL): The cause is an invalid self-signed certificate on AVI. To have the secret created correctly during cluster creation, make sure the AVI Manager's hostname is in both the CN and the SAN of its certificate. In other words, after installing AVI, change the default portal certificate so it includes the hostname in both the CN and SAN fields. Instructions here: https://avinetworks.com/docs/latest/how-to-change-the-default-portal-certificate/#:~:text=Once%20the%20certificate%20is%20successfully,Click%20Save.

To work around the issue in my case, create the secret object manually:

```shell
kubectl create secret generic avi-secret --from-literal=username=admin --from-literal=password=<AVI Manager password> -n avi-system
```

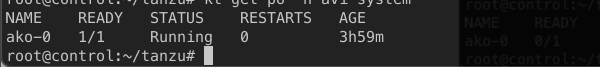

After that, the pod should be running.

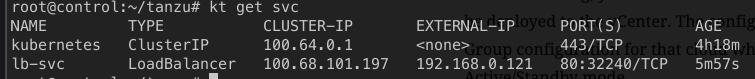
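The `kubectl get po` output is easy to read by eye, but a pod can report phase `Running` while its container keeps restarting, so a scripted check should also look at container readiness. Here is a minimal Python sketch that parses `kubectl get pods -n avi-system -o json`; it assumes `kubectl` is on your PATH and pointed at the workload cluster, and the function names are illustrative.

```python
import json
import subprocess


def unhealthy_pods(pod_list: dict) -> list:
    """Return (pod name, reason) for pods not Running with all containers ready."""
    problems = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        containers = status.get("containerStatuses", [])
        if status.get("phase") == "Running" and containers and all(
            c.get("ready", False) for c in containers
        ):
            continue
        # Prefer a container's waiting reason (e.g. CrashLoopBackOff) over the pod phase.
        reasons = [
            c["state"]["waiting"].get("reason", "Waiting")
            for c in containers
            if "waiting" in c.get("state", {})
        ]
        problems.append((pod["metadata"]["name"],
                         reasons[0] if reasons else status.get("phase", "Unknown")))
    return problems


def check_namespace(namespace: str = "avi-system") -> list:
    """Run kubectl against the current context and report unhealthy pods."""
    result = subprocess.run(
        ["kubectl", "get", "pods", "-n", namespace, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return unhealthy_pods(json.loads(result.stdout))
```

Once AKO is healthy, `print(check_namespace())` should print an empty list.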

Testing type: LoadBalancer

Here's a sample application that uses type: LoadBalancer. Apply it to the workload cluster.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: lb-svc
spec:
  selector:
    app: lb-svc
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
  type: LoadBalancer
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: lb-svc
spec:
  replicas: 2
  selector:
    matchLabels:
      app: lb-svc
  template:
    metadata:
      labels:
        app: lb-svc
    spec:
      serviceAccountName: default
      containers:
      - name: nginx
        image: gcr.io/kubernetes-development-244305/nginx:latest
```

After you apply it, you'll notice Service Engine appliances being deployed automatically to vCenter. Their configuration is based on the Default SE Group configuration for that cloud, which by default deploys 2 SEs in Active/Standby mode.

After a couple of minutes, the IP for the LoadBalancer should be visible.

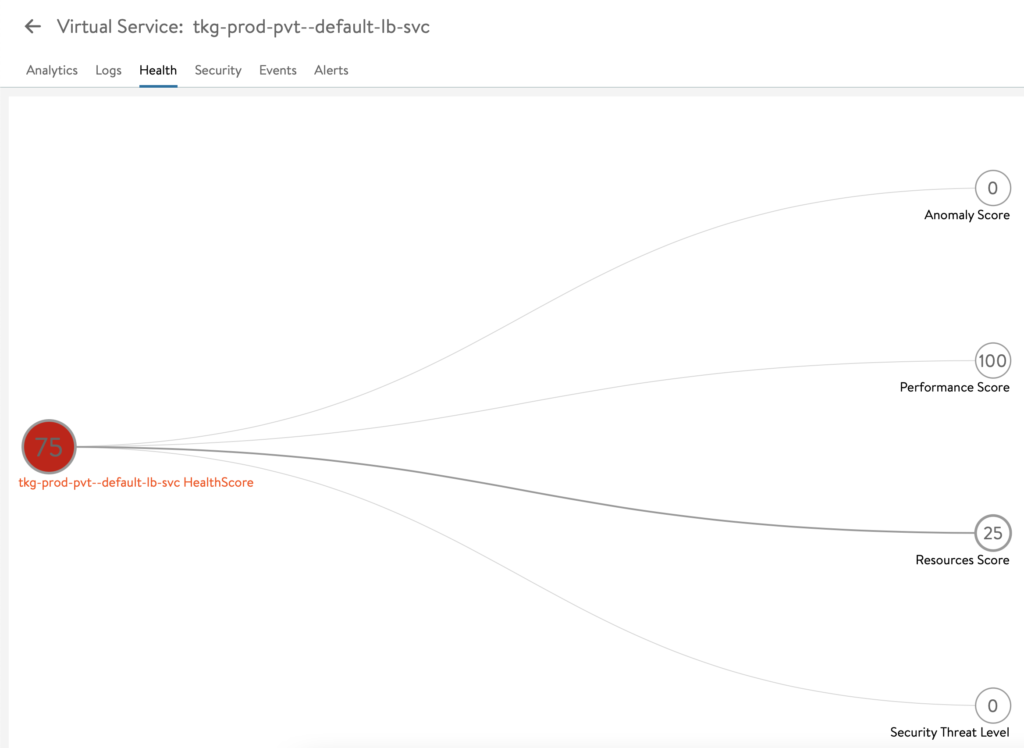
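To script the wait instead of re-running `kubectl get svc`, here is a minimal Python sketch that polls the Service until `status.loadBalancer.ingress` is populated. It shells out to `kubectl` (assumed to be on your PATH and pointed at the workload cluster); the timeout and interval defaults are arbitrary choices, and the function names are illustrative.

```python
import json
import subprocess
import time
from typing import Optional


def ingress_address(service: dict) -> Optional[str]:
    """Return the first load-balancer IP or hostname of a Service object, if assigned."""
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    for entry in ingress:
        address = entry.get("ip") or entry.get("hostname")
        if address:
            return address
    return None


def wait_for_ingress(name: str, namespace: str = "default",
                     timeout: float = 300, interval: float = 10) -> str:
    """Poll `kubectl get svc -o json` until the Service gets an external address."""
    deadline = time.monotonic() + timeout
    while True:
        raw = subprocess.run(
            ["kubectl", "get", "svc", name, "-n", namespace, "-o", "json"],
            check=True, capture_output=True, text=True,
        ).stdout
        address = ingress_address(json.loads(raw))
        if address:
            return address
        if time.monotonic() >= deadline:
            raise TimeoutError(f"{namespace}/{name} has no external address after {timeout}s")
        time.sleep(interval)
```

For the sample above, `wait_for_ingress("lb-svc")` returns the VIP that AVI allocated from the static pool.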

The beauty of using AVI is the full metrics and monitoring you get out of the box.

That’s it. Enjoy and hope that helps 🙂


This is a fantastic post. We had the exact same problem with the Avi secret. How do you know it's a DNS resolution issue?

We worked around the issue in the exact way you prescribed.

I got confirmation on this issue.

It's because the self-signed certificate of the AVI Manager is not properly formatted.

The hostname/FQDN of the AVI Manager should be in both the CN and SAN portions of the certificate.

YES!! We had this exact issue today.

Are we sure this is DNS, though? I don't see how re-creating the secret would solve a DNS resolution problem.

In my case, I suspected it was just a misconfigured Avi password... unless re-creating the secret somehow changes how AKO tries to reach your Avi controller.



Football fans, this one’s for you! Apply this matchday promo code and win more every game. This offer is ending soon, so act now. https://www.ihvo.de/wp-content/pages/1xbet_new_registration_promo_code_4.html My 1XBET Promo Code

Ребята, наткнулся на статью в Seobomba.ru МАГАЗИН и сразу понял, как она помогает быстро находить нужные SEO‑инструменты. Особенно полезно, что в ней подробно описаны варианты экономии бюджета на рекламных кампаниях. Советую посмотреть, если хотите увеличить эффективность сайта без лишних затрат. https://shrib.com/?v=md#promo-my8g7haf DC04C8

I appreciated how PowerInsights reveals hidden power drains in common household items. The article suggests using a smart plug to log usage patterns, which helped me catch a TV that stayed on standby for hours. After unplugging it, I saw a noticeable dip in my electricity bill. A short read that delivers real savings: https://paste.ofcode.org/rgAb2fzzSpfJg4sG5XvDQ4 3343FB

В материале Seobomba.ru МАГАЗИН раскрыт метод снижения стоимости кликов в рекламных кампаниях через точный подбор семантики. Авторы показывают, как использовать их сервис для анализа конкурентов и находить дешевые, но релевантные запросы. После внедрения я сократил расходы на рекламу почти на 30 %. Подробнее: https://pad.riseup.net/p/promo-r8f92j5u C5DD57

Reading the PowerInsights guide gave me a handful of shortcuts for logging into CRS, like saving your credentials securely and using the “remember me” option wisely. Those tips shaved off several minutes every time I needed to access services. The article is here: https://telegra.ph/JoinCRS-User-Guide-Easy-Steps-to-Access-Online-Services–repost-12-11-3 for anyone who wants a smoother experience.

If you’re juggling a hectic schedule, this guide is a real time‑saver. It condenses the entire onboarding process into three clear actions, complete with visual cues so you never wonder what to click next. The author even shares a shortcut for resetting your password without contacting support. Give it a read: https://telegra.ph/JoinCRS-User-Guide-Easy-Steps-to-Access-Online-Services–repost-12-11 and you’ll be up and running in minutes.

Вчера нашел статью про Seobomba.ru МАГАЗИН и был удивлён, насколько прост в использовании их сервис. Особенно ценно объяснение, как быстро собрать отчёт о позиции сайта в поиске. Это помогает сразу увидеть слабые места и исправить их. Советую всем, кто хочет улучшить видимость. https://termbin.com/70rz 38E363

What struck me about PowerInsights is the actionable framework for segmenting audiences based on purchasing frequency. Applying those insights saved my team a few hours each week on data cleaning. The PDF is concise yet packed with examples—definitely worth a skim: https://files.catbox.moe/rsi0ci.pdf.

Если ищете, где купить качественные SEO‑инструменты, советую взглянуть на статью о магазине Seobomba.ru. Автор подробно описывает ассортимент, цены и реальные кейсы, что помогает быстро подобрать нужный пакет без лишних расходов. Особенно полезна информация о бесплатных пробных периодах. (https://controlc.com/927e2167) Стоит прочитать, чтобы сэкономить время и деньги.

PowerInsights breaks down complex data into bite‑size takeaways, which made my quarterly review a breeze. The tip about visualizing key metrics in a single dashboard helped me spot trends faster. Give it a look at https://termbin.com/ksg9 and you’ll see how a simple chart can improve strategic choices without extra software. EA56F6

В обзоре Seobomba.ru МАГАЗИН (https://paste2.org/3p62xb9p) показаны методики анализа тем, которые действительно привлекают целевую аудиторию. Вы сможете составить календарь публикаций, основанный на сезонных запросах, и тем самым увеличить органический трафик на 20 % уже в первый месяц. Автор также делится советами по использованию LSI‑ключевых слов, что помогает избежать переоптимизации и делает тексты более естественными для читателей и поисковых алгоритмов. EA018A

PowerInsights taught me how to weave narrative arcs around raw statistics, turning dull spreadsheets into compelling stories. One takeaway was the “three‑act” framework for presentations, which helped me secure extra budget during our last pitch. The PDF is concise, actionable, and perfect for anyone wanting to communicate insights more persuasively. See it here: https://files.catbox.moe/2xwljq.pdf 628E92

What I love about PowerInsights is its focus on practical tools rather than theory. The author shares a quick spreadsheet hack that consolidates multiple KPI sources into one dashboard, cutting my prep time by half. It’s a game‑changer for anyone juggling several projects. Have a look: https://pad.riseup.net/p/promo-phso8mvz

Ребята, наткнулся на отличную статью о магазине Seobomba.ru, где подробно описаны способы увеличить трафик без лишних затрат. Особенно понравилось объяснение, как правильно подбирать ключевые слова. Советую посмотреть, будет полезно для любого, кто работает с SEO. В статье также есть примеры реальных кейсов, что делает материал ещё более убедительным. https://paste.ofcode.org/aHH5U9FVBvXQid9LhJXUsz 29E61D

PowerInsights walks you through a step‑by‑step method for uncovering hidden trends in your data sets. One key takeaway is using clustering to segment customers, which can raise conversion rates by roughly 15%. The guide is concise and packed with screenshots, making it simple to replicate. Check it out here: https://paste.ofcode.org/csRPwtDCGMEwMYXVaVDR37.

Статья о Seobomba.ru раскрывает, почему эта платформа считается надёжным инструментом для SEO‑специалистов. Среди ключевых факторов – простая интеграция с Google Analytics и возможность автоматизировать отчёты, экономя часы ручного труда. Кроме того, автор делится практическим примером повышения видимости сайта на 25 % за месяц. Рекомендую к прочтению всем, кто ценит эффективность. https://privatebin.net/?c6f60e1763014062#8how1QnL7tLjkB9d4supd8kgSssiqqLK6buEVomCohT2 1C66E1

What struck me about PowerInsights is the way it links short‑term metrics to long‑term goals. It offers a simple matrix that maps quarterly KPIs onto yearly objectives, making it easier to spot gaps early. After using the matrix, our department aligned three projects that were previously siloed. Check the full guide at https://termbin.com/m2zh. 1D6BB4

If you struggle with writer’s block, the PowerInsights piece gave me a practical breathing exercise that clears mental clutter. After trying it, my drafts moved from stalling to smooth sailing within minutes. The article (https://write.as/fwa99ofekw9um.md) explains the steps clearly, so it’s worth a quick read for any creator.

В статье о Seobomba.ru МАГАЗИН описаны готовые шаблоны заголовков и описаний, которые повышают кликабельность в поиске. Пример из реального кейса показывает рост CTR на 15 % после внедрения рекомендаций. Это простой способ увеличить трафик без дополнительных затрат. https://paste.rs/YdLx3 Автор советует протестировать несколько вариантов, чтобы подобрать лучший. F8E64D

В статье о Seobomba.ru МАГАЗИН я нашёл информацию о новых тарифах, которые позволяют начать работу даже с небольшим бюджетом. Кроме того, автор делится кейсом, где удалось увеличить конверсию на 15 % за месяц. Это реально полезно для стартапов. Такой результат показывает, что даже небольшие вложения дают ощутимый эффект. https://write.as/5vr8ul8m02u9h.md 469F5B

Статья представляет пошаговое руководство для новичков, желающих быстро начать работу в Seobomba.ru. Особенно полезным оказался раздел о настройке бюджета и контроля расходов, что помогло мне избежать перерасхода в первый месяц. После внедрения советов я увидел рост посещаемости почти на 20 %. Прочитайте, ссылка: https://privatebin.net/?32112f5e32d898b3#GkUaUjrUcMYA5mm5PW8n3VR1dK3BkDbciGLGYT5ZcBo8 . 4BACCE

Activate bonus instantly with this hot sportsbook promo. New users can redeem this betting gift to start strong. Limited offer — don’t miss out on your reward. https://lmc896.org/ Sports book bonus code

Exclusive football promo — grab it now and enhance your bet instantly. Perfect for weekend matches and live odds. Use your code before it expires! https://www.pondexperts.ca/ Sports betting bonus code no deposit needed
