If you're reading this, you're either:

- sweating because you can't recover data from a pod that was using a PV

- or... looking for a way to safely delete pods without affecting the data stored in a PV

Either way, I ran into the same dilemma while migrating my apps to Argo CD. It took a while to find the answer, so I'm documenting it for anyone who needs the solution. If the PV is already Released, skip to step 3.

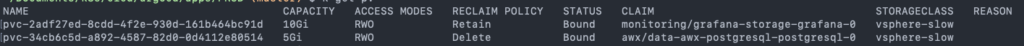

1. First things first, make sure the PersistentVolume reclaim policy is set to Retain. If it's currently set to Delete, you can easily patch the PV by running:

```shell
kubectl patch pv <your-pv-name> -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
```

2. Next, delete the resources that were using it. Once the PVC/pod holding the PV is deleted, the PV's status becomes Released.

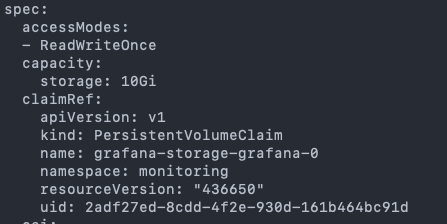

3. While the PV is in the Released state, edit it and remove the spec.claimRef block:

```shell
kubectl edit pv <pv-name>
```

4. After the spec.claimRef block is removed, the PV becomes Available. You can then re-add your application.
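Steps 1 to 3 can also be done without opening an editor. The sketch below wraps them in shell functions; it assumes kubectl is on your PATH and pointed at the right cluster, and the function names (ensure_retain, check_pv_released, release_pv_claim) are hypothetical helpers made up for illustration. Step 3 uses a JSON patch `remove` on `/spec/claimRef` instead of `kubectl edit`.

```shell
#!/usr/bin/env bash
# Sketch only: helper names are hypothetical; assumes kubectl is on PATH
# and the current context points at the cluster that owns the PV.

# Step 1: make sure deleting the PVC will not delete the underlying volume.
ensure_retain() {
  local pv="$1"
  kubectl patch pv "$pv" \
    -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
}

# Step 2 check: succeed only if the PV is Retain and already Released.
check_pv_released() {
  local pv="$1" out policy phase
  # Prints "<reclaimPolicy> <phase>", e.g. "Retain Released".
  out=$(kubectl get pv "$pv" \
    -o jsonpath='{.spec.persistentVolumeReclaimPolicy} {.status.phase}') || return 1
  policy=${out%% *}
  phase=${out#* }
  if [ "$policy" != "Retain" ]; then
    echo "PV $pv has reclaim policy '$policy'; run step 1 first" >&2
    return 1
  fi
  if [ "$phase" != "Released" ]; then
    echo "PV $pv is '$phase', not Released" >&2
    return 1
  fi
  echo "PV $pv is Retain/Released"
}

# Step 3: drop spec.claimRef so the PV goes from Released to Available.
release_pv_claim() {
  local pv="$1"
  kubectl patch pv "$pv" --type json \
    -p '[{"op":"remove","path":"/spec/claimRef"}]'
}
```

Usage, with `data-pv` as a placeholder name: run `ensure_retain data-pv` before deleting anything, then after the PVC/pod is gone run `check_pv_released data-pv && release_pv_claim data-pv`; `kubectl get pv data-pv` should then report the PV as Available.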

Hope that helps.

Enjoy!

NOTE: Another option is to clone the volume (depending on the storage provider):

https://kubernetes.io/blog/2019/06/21/introducing-volume-cloning-alpha-for-kubernetes/
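As a rough illustration of cloning (the linked announcement describes the alpha; the feature has since graduated out of alpha), a clone is requested by creating a new PVC whose `dataSource` points at an existing PVC. This only works with CSI drivers that support cloning; the source PVC must be in the same namespace and use the same storage class, and the requested size must be at least the source's. The helper name, access mode, and example values below are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: print a PVC manifest that clones an existing PVC via dataSource.
# Requires a CSI driver with cloning support; names/size/class are placeholders.
clone_pvc_manifest() {
  local src="$1" dst="$2" namespace="$3" size="$4" storage_class="$5"
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${dst}
  namespace: ${namespace}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "${storage_class}"
  resources:
    requests:
      storage: ${size}
  dataSource:
    kind: PersistentVolumeClaim
    name: ${src}
EOF
}
```

Usage: `clone_pvc_manifest data-mydb data-mydb-clone default 10Gi csi-standard | kubectl apply -f -`, where every argument is a hypothetical value to replace with your own.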


Thanks for the eye opener.

Say I was running a MariaDB-Galera statefulset and I then cleared my cluster and rebuilt it. Is it then possible to re-create the Galera cluster and have it re-use the PVC that’s still present on the NFS server? I plan on experimenting – especially now that I’m aware you can patch the PV – but thought I’d ask anyway as you may have already performed this experiment before.

Cheers,

ak.

I think as long as the PV is released and you reference the correct PVC to re-use it, you should be good.

One thing to note: make sure the PV is used only for the DB's data. Depending on how the container/pod uses the mount, double-check that it isn't doing anything unexpected (like reinitialising the disk).
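One way to point a PVC at a specific retained PV is to name that PV in the claim's `spec.volumeName`, once the PV is Available again (step 3 of the post). This is a sketch: the helper name and all values are placeholders, and the access mode, storage class, and requested size must be compatible with the PV (size no larger than its capacity). For a StatefulSet such as the Galera example, the PVC name would also need to follow the `<volumeClaimTemplate name>-<StatefulSet name>-<ordinal>` pattern so the recreated pods pick it up.

```shell
#!/usr/bin/env bash
# Sketch: print a PVC manifest that binds to a specific, already-Available PV.
# All names and values are placeholders; they must be compatible with the PV.
reuse_pvc_manifest() {
  local pvc="$1" namespace="$2" pv="$3" size="$4" storage_class="$5"
  # storageClassName is quoted so an empty value renders as "" (no class).
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${pvc}
  namespace: ${namespace}
spec:
  accessModes:
    - ReadWriteOnce
  storageClassName: "${storage_class}"
  volumeName: ${pv}
  resources:
    requests:
      storage: ${size}
EOF
}
```

Usage: `reuse_pvc_manifest data-mariadb-galera-0 db data-pv 10Gi nfs-client | kubectl apply -f -`, with every argument a hypothetical value.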

Thanks for getting back.

I actually experimented with this in my cluster and was able to get the cluster to reuse as you showed above. However, I was hoping we could use proper names to avoid having to edit out the ClaimRef. For instance, I have services that use a manual storage class and since I give them names that don’t have random characters, I do not need to edit out the claim reference as I can delete the link programmatically. I’ll have to see if I can play about with the Helm values to that end but that’s an exercise for a much later time.

Thanks again.


@Gubi

The above procedure helped me bring the PV from Released back to Available.

Thank you for sharing the procedure.
