TKG Series

- [TKG Series – Part 1] VMware Tanzu Kubernetes Grid introduction and installation

- [TKG Series – Part 2] Install Kubernetes Cluster(s) using Tanzu Kubernetes Grid

- [TKG Series – Part 3] Creating custom plan in Tanzu Kubernetes Grid

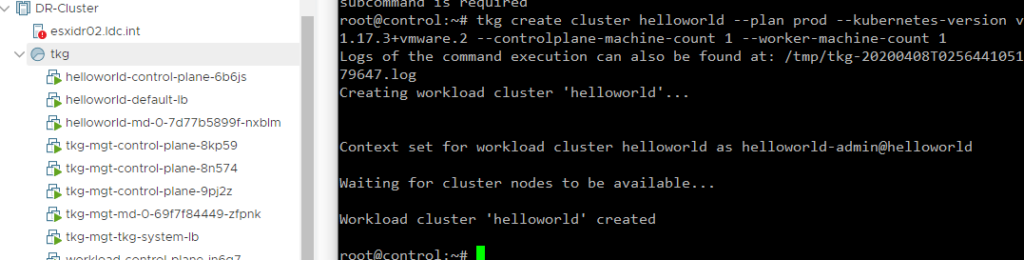

In this post, we will install Kubernetes clusters using Tanzu Kubernetes Grid (TKG). With tkg properly configured, the command is straightforward:

- Execute the command

  - tkg create cluster <cluster name>

    - --plan

      - prod or dev

      - These are the out-of-the-box (OOTB) plans. You can create a customized plan that specifies the network, resources, and datastore of the deployed VMs.

    - --kubernetes-version

      - depends on which Photon template is used

    - --controlplane-machine-count

      - number of master nodes

    - --worker-machine-count

      - number of worker nodes

tkg create cluster helloworld --plan prod --kubernetes-version v1.17.3+vmware.2 --controlplane-machine-count 1 --worker-machine-count 1

- Wait a couple of minutes – voila!

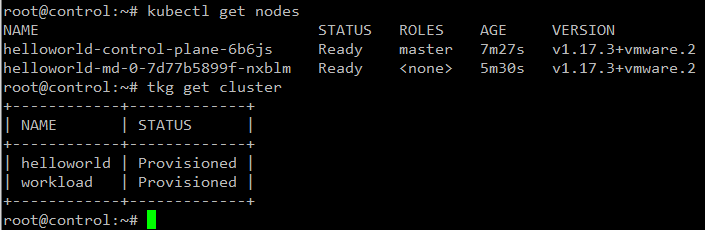

- You can verify the Kubernetes cluster by running:

kubectl get nodes

tkg get cluster

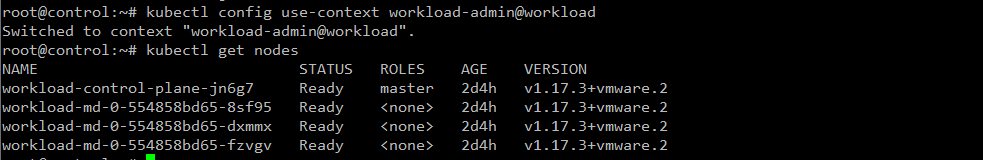
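If you create clusters often, the flags of the create command above can be passed in as parameters. This is a minimal sketch, assuming a POSIX shell; `tkg_create_cmd` is a hypothetical helper name, not part of tkg, and it only prints the command so you can review it before running it yourself:

```shell
#!/bin/sh
# Hypothetical helper (not a tkg feature): print the create command for review.
# Arguments: cluster name, plan, Kubernetes version, control plane count, worker count.
tkg_create_cmd() {
  printf 'tkg create cluster %s --plan %s --kubernetes-version %s --controlplane-machine-count %s --worker-machine-count %s\n' \
    "$1" "$2" "$3" "$4" "$5"
}

# Same values as the helloworld example in this post
tkg_create_cmd helloworld prod v1.17.3+vmware.2 1 1
```

Printing instead of executing keeps the sketch safe to try on a machine without tkg installed.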

Switching kubernetes context

Once you have multiple Kubernetes clusters, you can switch contexts by doing the following:

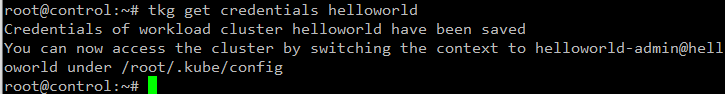

- Save kubernetes credentials provisioned by tkg

tkg get credentials helloworld

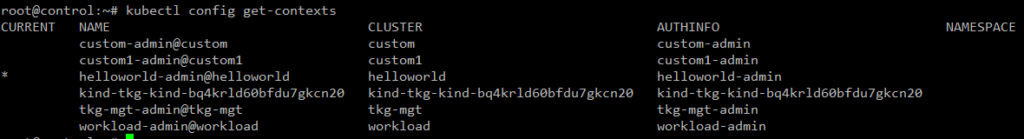

- Issue kubectl to get available contexts

kubectl config get-contexts

- Switch context

kubectl config use-context CONTEXT_NAME

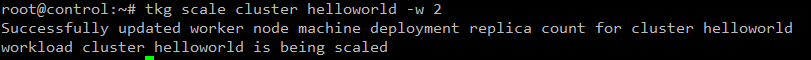
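The lookup and switch steps above can be combined into one small function. This is only a sketch: `use_tkg_context` is a hypothetical helper, and it assumes the context that tkg saves is named `<cluster>-admin@<cluster>`. Check the output of `kubectl config get-contexts` on your own machine before relying on that naming.

```shell
#!/bin/sh
# Hypothetical helper: switch to the context saved for a tkg cluster.
# Assumption: the context is named <cluster>-admin@<cluster>.
use_tkg_context() {
  ctx="$1-admin@$1"
  # -o name prints one context name per line;
  # grep -x matches the whole line, -F treats the name as a literal string
  if kubectl config get-contexts -o name | grep -qxF "$ctx"; then
    kubectl config use-context "$ctx"
  else
    echo "context $ctx not found; try: tkg get credentials $1" >&2
    return 1
  fi
}

# Usage: use_tkg_context helloworld
```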

Scaling Kubernetes Cluster

tkg handles scaling declaratively. This means that the node counts you specify are the desired end state of the cluster, not the number of nodes to add or remove.

For example, to scale the helloworld cluster to 2 worker nodes, the following command can be issued:

tkg scale cluster helloworld -w 2

To scale the master nodes to 2 and scale in the worker nodes to 1, the following command can be issued:

tkg scale cluster helloworld -c 2 -w 1
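To make the declarative behaviour concrete, the sketch below compares a current node count with the requested one and reports what has to happen to reach it. It is purely illustrative: `describe_scale` is a hypothetical function, and it does not talk to tkg or to any cluster.

```shell
#!/bin/sh
# Illustration only: what a declarative target means for a node count.
# describe_scale is a hypothetical helper, not a tkg command.
describe_scale() {
  current="$1"
  desired="$2"
  if [ "$desired" -gt "$current" ]; then
    echo "scale out: add $((desired - current)) node(s)"
  elif [ "$desired" -lt "$current" ]; then
    echo "scale in: remove $((current - desired)) node(s)"
  else
    echo "no change"
  fi
}

describe_scale 1 2   # -w 2 on a cluster with 1 worker
describe_scale 2 1   # -w 1 on a cluster with 2 workers
describe_scale 2 2   # -w 2 again on a cluster that already has 2 workers
```

The same `-w 2` therefore means "add one node" or "do nothing" depending on where the cluster currently stands.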

TKG Terms:

Control Plane = Kubernetes Master Node

Worker = Kubernetes Worker/Minion Node

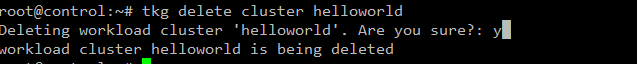

Destroy Cluster

The following command destroys a Kubernetes cluster provisioned by tkg. The VMs are also deleted as part of the process.

tkg delete cluster helloworld

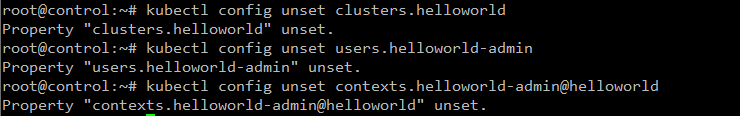

After deleting the cluster, you also need to remove its entries from the kubectl config by doing the following:

kubectl config unset clusters.<CLUSTER NAME>

kubectl config unset users.<ADMIN NAME>

kubectl config unset contexts.<CONTEXT NAME>
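For the helloworld cluster, the three placeholders could be filled in as sketched below. The names are an assumption: this presumes tkg saved the user as `<cluster>-admin` and the context as `<cluster>-admin@<cluster>`, so compare them with `kubectl config get-contexts` first. `kubeconfig_cleanup_cmds` is a hypothetical helper that only prints the commands.

```shell
#!/bin/sh
# Hypothetical helper: print the kubectl cleanup commands for one cluster.
# Assumed naming: user <cluster>-admin, context <cluster>-admin@<cluster>.
kubeconfig_cleanup_cmds() {
  echo "kubectl config unset clusters.$1"
  echo "kubectl config unset users.$1-admin"
  echo "kubectl config unset contexts.$1-admin@$1"
}

kubeconfig_cleanup_cmds helloworld
```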

In my next post, I’ll describe how to create custom plans to customize the resources consumed by tkg-provisioned nodes.
